About This Review

This is the third annual 'shallow' review of what’s going on in technical AI safety.

It’s shallow in the sense that 1) we are specialists in almost none of it and 2) we spent only about two hours on each entry. Still, among other things, we processed every arXiv paper on alignment, all Alignment Forum posts, and a year’s worth of Twitter.

It is substantially a list of lists structuring around 800 links. The point is to produce stylised facts, forests out of trees; to help you look up what’s happening, or that thing you vaguely remember reading about; to help new researchers orient, know some of their options and the standing critiques; and to help you find who to talk to for actual information. We also track things which didn’t pan out.

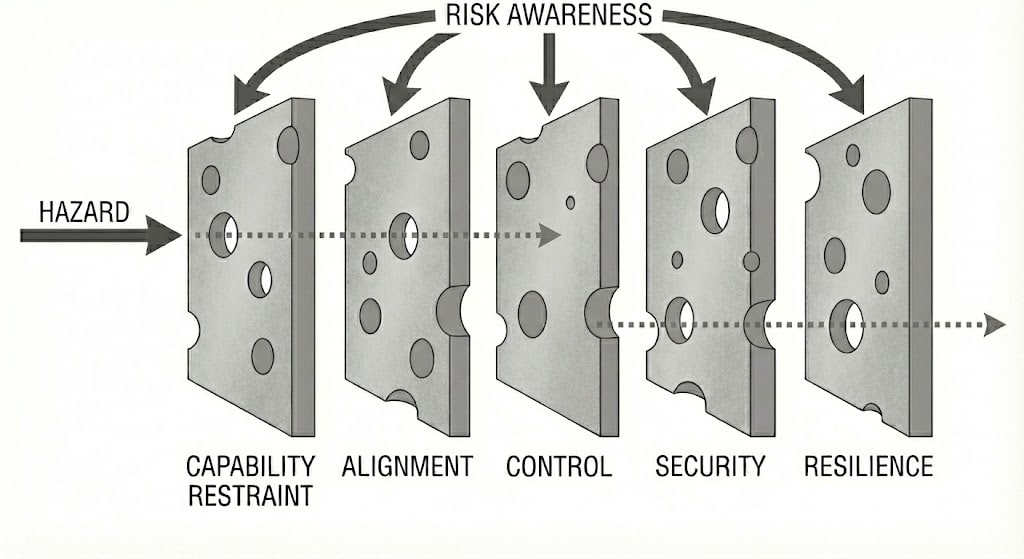

Here, “AI safety” means technical work intended to prevent future cognitive systems from having large unintended negative effects on the world. So it’s capability restraint, instruction-following, value alignment, control, and risk awareness work.

We don’t cover security or resilience at all.

We ignore a lot of relevant work (including most of capability restraint): things like misuse, policy, strategy, OSINT, resilience and indirect risk, AI rights, general capabilities evals, and things closer to “technical policy” and products (like standards, legislation, SL4 datacentres, and automated cybersecurity). We focus on papers and blogposts (rather than, say, gdoc samizdat or tweets or GitHubs or Discords). We only use public information, so we are off by some additional unknown factor.

We try to include things which are early-stage and illegible – but in general we fail and mostly capture legible work on legible problems (i.e. things you can write a paper on already).

Even ignoring all of that as we do, it’s still too long to read. Here’s a spreadsheet version (agendas and papers) and the GitHub repo, including the data and the processing pipeline. Methods are at the bottom. Gavin’s editorial outgrew this post and became its own thing.

If we missed something big or got something wrong, please let us know and we will edit it in.

Authors

- Gavin Leech

- Tomáš Gavenčiak

- Stephen McAleese

- Peli Grietzer

- Stag Lynn

- jordine

- Ozzie Gooen

- Violet Hour

- ramennaut

This website was created for the Shallow Review project by QURI, Ozzie and Tomáš.

Acknowledgments

These people generously helped with the review by providing expert feedback, literature sources, advice, or other support. Any remaining mistakes are ours.

Thanks to Neel Nanda, Owain Evans, Stephen Casper, Alex Turner, Caspar Oesterheld, Steve Byrnes, Adam Shai, Séb Krier, Vanessa Kosoy, Nora Ammann, David Lindner, John Wentworth, Vika Krakovna, Filip Sondej, JS Denain, Jan Kulveit, Mary Phuong, Linda Linsefors, Yuxi Liu, Ben Todd, Ege Erdil, Tan Zhi-Xuan, Jess Riedel, Mateusz Bagiński, Roland Pihlakas, Walter Laurito, Vojta Kovařík, David Hyland, plex, Shoshannah Tekofsky, Fin Moorhouse, Misha Yagudin, Nandi Schoots, Nuno Sempere, and others for helpful comments.

Epigraph

No punting — we can’t keep building nanny products. Our products are overrun with filters and punts of various kinds. We need capable products and [to] trust our users.

Over the decade I've spent working on AI safety, I've felt an overall trend of divergence; research partnerships starting out with a sense of a common project, then slowly drifting apart over time… eventually, two researchers are going to have some deep disagreement in matters of taste, which sends them down different paths.

Until the spring of this year, that is… something seemed to shift, subtly at first. After I gave a talk — roughly the same talk I had been giving for the past year — I had an excited discussion about it with Scott Garrabrant. Looking back, it wasn't so different from previous chats we had had, but the impact was different; it felt more concrete, more actionable, something that really touched my research rather than remaining hypothetical. In the subsequent weeks, discussions with my usual circle of colleagues took on a different character — somehow it seemed that, after all our diverse explorations, we had arrived at a shared space.

Brought to you by the Arb Corporation